Artificial Intelligence Resources

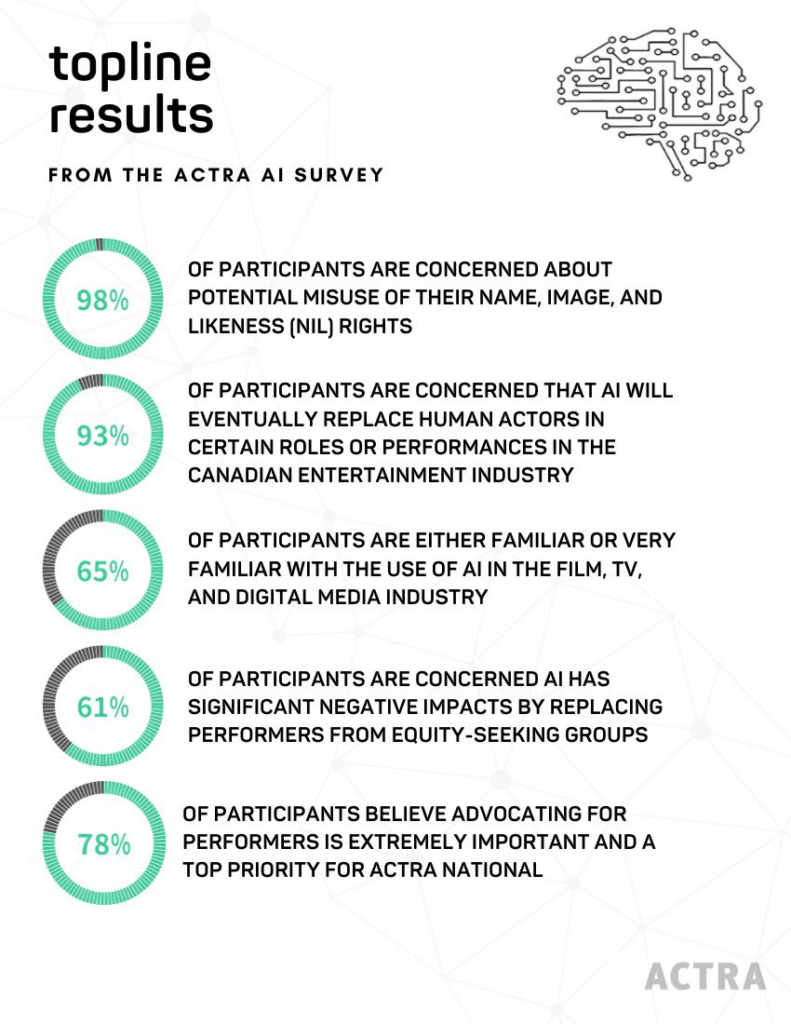

The use of Artificial Intelligence (AI) technology is the latest disruptor in the screen-based industry. While AI and AI tools offer many advantages, legislation needs to be in place to ensure AI is not used for nefarious purposes. For artists, it is imperative that performers give consent, receive compensation, and retain control over the use of their image and voice.

FAQs

Get Involved

Join ACTRA Toronto’s campaign asking the Government of Ontario to amend the Working for Workers Act to protect Ontario workers from AI, and to ensure the arts, including the film, television and digital media industry, are included in Ontario’s Trustworthy Artificial Intelligence (AI) Framework to support AI use that is accountable, safe and rights-based.

Join Equity U.K.’s campaign to strengthen performers’ rights in response to the rise of AI across the U.K. entertainment industry. The goal of the campaign is to get the U.K. government to recognize the threat posed by AI, update the law, and get industry leaders onside so that artists can feel safe in the knowledge that their intellectual property is protected. U.K.-based individuals are invited to take action by lobbying their Member of Parliament.

The Human Artistry Campaign has endorsed this U.S. bill, which would establish a federal right to Americans’ own voices and likenesses and establish a system to fight back against nonconsensual AI-generated deepfakes and voice clones. U.S.-based individuals are invited to email their U.S. Representatives to encourage them to support the bill. ACTRA Toronto is a proud supporter of the Human Artistry Campaign.

The Human Artistry Campaign has endorsed this U.S. bill, which would establish safeguards to protect against generative AI abuses that stem from the unauthorized copying of a person’s individuality and result in deepfakes, voice clones, and non-consensual impersonations. U.S.-based individuals are invited to email their U.S. Representatives to encourage them to support this bill. ACTRA Toronto is a proud supporter of the Human Artistry Campaign.

NAVA’s #fAIrVoices campaign focuses on the three elements voice actors need for the ethical use of AI: consent, compensation, and control. The campaign also includes a pledge from five of the most prominent online casting companies in voiceover, promising never to use voice actors’ audio files to create or train AI without their consent. ACTRA Toronto is a proud supporter of NAVA and the #fAIrVoices campaign.

United Voice Artists (UVA) is asking anyone who values the authenticity and emotional connection brought by human voice actors over AI-generated voices to sign this petition. ACTRA Toronto is a proud member of the UVA.

ACTRA

ACTRA Toronto AI Sub-Committee

About

The existential threat AI poses to our industry is well documented and increasing exponentially as the technology evolves. The ACTRA Toronto AI Sub-Committee was established in the summer of 2023 to address members’ concerns and raise awareness. This requires a two-pronged attack: lobbying for legislation at all levels of government, and securing clearly stated AI protections in ACTRA’s collective bargaining agreements.

Mandate

To investigate and recommend safe protocols and protections around the implementation and use of AI regarding a performer’s image, likeness, voice, or character voices, or any other intellectual property (biometrics) that a performer brings to a production or role.

Co-Chairs

John Cleland

Cory Doran

Get Involved

Interested in joining or learning more about the AI Sub-Committee? Please fill out the form below. All submissions are vetted by ACTRA Toronto.

The Three C’s

AI has woven itself into the fabric of our daily experiences, from personalized recommendations to advanced automation. However, this transformative technology also brings forth ethical considerations for performers that demand our attention. The need for ethical AI arises from the potential consequences of its use, ranging from biased algorithms to privacy infringements. By prioritizing concepts like Consent, Compensation, and Control, we can shape an AI-driven future that respects individual performer rights, promotes fairness of use, and aligns with the values of a diverse and interconnected global film industry.

CONSENT

Performers should have the right to consent to, and be credited for, the use of their NIL Rights* in new works and in the training of AI models.

COMPENSATION

Performers should be compensated for all AI uses of their NIL Rights.*

CONTROL

Performers should be able to control the use of their NIL Rights.* Once a digital replica is made, any company handling this data must commit to the safe storage and tracking of these files.

*NIL Rights: collective term encompassing personal voices, sound effects, actions, behaviour, images, likenesses and personalities.

Canadian Legal Landscape

Federal

Consultation: Help define the next chapter of Canada’s AI leadership

The federal government conducted a 30-day national consultation to build a stronger AI strategy, seeking input on laws that guarantee Consent, Compensation, and Control over AI use. Performers were encouraged to help raise awareness of how AI is already affecting the film and television sector by completing the survey, and ACTRA Toronto created a consultation guide to assist them. The consultation period closed October 31, 2025.

Canadian Artificial Intelligence Safety Institute

The Government of Canada launched the Canadian Artificial Intelligence Safety Institute (CAISI) to leverage Canada’s world-leading AI research ecosystem and talent base to advance the understanding of risks associated with advanced AI systems and to drive the development of measures to address those risks. CAISI will conduct research under two streams: applied and investigator-led research, and government-directed projects.

Consultation on Copyright in the Age of Generative Artificial Intelligence

Innovation, Science and Economic Development Canada conducted a consultation on the impacts of recent developments in AI on the creative industries and on the economic impacts these technologies have, or could have, on Canadians, to determine whether changes are required to improve or reinforce copyright policy for a modern, evolving Canadian economy. The consultation period closed January 15, 2024.

Assessing the Impact of Canada’s Proposed Bill C-27, Artificial Intelligence and Data Act

ACTRA National submitted recommendations to the Standing Committee on Industry and Technology’s Artificial Intelligence and Data Act consultation to safeguard the fundamental needs of Canadian performers: respect, fair wages, and protection against abuse. (September 8, 2023)

Consultation on a Modern Copyright Framework for Artificial Intelligence and the Internet of Things

ACTRA National’s submission to Canadian Heritage and Innovation, Science and Industry focuses on the extent to which copyright-protected works are integrated in AI applications and the consequences of the misuse of AI technology. (September 17, 2021)

Provincial

Human Rights Impact Assessment (HRIA) for AI Technologies

The Law Commission of Ontario and the Ontario Human Rights Commission jointly created an AI impact assessment tool to provide organizations with a method to assess AI systems for compliance with human rights obligations. The purpose of the Human Rights Impact Assessment for AI Technologies is to assist developers and administrators of AI systems to identify, assess, minimize or avoid discrimination and uphold human rights obligations throughout the lifecycle of an AI system.

Trustworthy Artificial Intelligence (AI) Framework

The Government of Ontario is developing the province’s first Trustworthy AI Framework, which will comprise policies, products and guidance that set out risk-based rules for the transparent, responsible and accountable use of AI by the Ontario government.

Global Legislative Landscape

The growing use of unregulated generative Artificial Intelligence (AI) in Canada’s film and television industry, and its potential impact on the livelihoods of Canadian workers, is a mounting concern. While our federal government boasts that Canada is “one of the first countries in the world to propose a law to regulate AI,” it has failed to pass any meaningful legislation, in particular regulations to protect performers working in our screen-based industry.

Below is an overview of the state of AI legislation in select G20 countries:

Resources

MILA is a community of over 1,200 researchers in machine learning and interdisciplinary teams committed to advancing artificial intelligence for the benefit of all.

The Human Artistry Campaign outlines the principles of how we can responsibly use artificial intelligence – to support human creativity and accomplishment with respect to the inimitable value of human artistry and expression.

LOK VOX, in partnership with NAVA, is determined to make sure artists never lose their voice. Learn how you can unlock the tools to combat AI Generated Vocal Piracy.

Montreal International Center of Expertise in Artificial Intelligence (CEIMIA) is an international leader and catalyst for innovative, socially responsible and high-impact projects in applied artificial intelligence.

National Association of Voice Actors (NAVA) is a non-profit association created to advocate and promote the advancement of the voice acting industry. ACTRA Toronto is a proud supporter of NAVA.

The United Nations (UN) Secretary-General has convened a multi-stakeholder High-level Advisory Body (HLAB) on AI to undertake analysis and advance recommendations for the international governance of AI.

UVA (United Voice Artists) is a worldwide group of voice acting guilds, associations, and unions whose mission is to protect and preserve the artistic heritage of professional voice-over artists. ACTRA Toronto is proud to be a founding member.

Research

In recent years, artificial intelligence (AI), and more specifically generative AI (GenAI), has developed at an exponential rate. GenAI is AI that is capable of generating content, such as text, images, videos or audio, when prompted by a user. (January 14, 2025)

Media Interviews

Rabble.ca: Mr. Beast, AI in media and the fight to protect performers on set. Rabble editor Nick Seebruch sits down with Alistair Hepburn, executive director of ACTRA Toronto, to discuss the do-not-work notice placed on Mr. Beast’s Beast Games in Toronto and other actions ACTRA is taking to protect Canadian performers.

Industry Updates

AI in the news

Amazon has quietly pulled the AI dubs from several English-dubbed versions of popular anime shows after a fallout on social media from furious fans.

The United States is stealing Canada’s future, and nobody seems to notice (October 13, 2025) While the federal government appears determined to avoid repeating the mistakes of the past, Canada’s new AI strategy task force may already have a blind spot. In the AI economy, value is being created not just by established players, but by individual talent, pre-company, pre-product and pre-revenue. That’s the race Canada risks losing.

The federal government is launching a task force on artificial intelligence, which will suggest policies to improve research, talent, adoption and commercialization of AI in Canada, among other goals. The government will deliver an updated national strategy to support and develop the sector by the end of 2025.

“The Minister Will Call You”: AI Voice Clone Job Scam Steals Bs 5 Million (September 23, 2025) This article examines how a criminal organization in Bolivia managed to defraud more than Bs 5 million by impersonating the Minister of Education. From inside prison, one of the ringleaders used AI to clone the minister’s voice, tricking victims into believing they were being personally contacted with job offers in the Ministry of Education.

Generative AI chatbots can amplify delusions in people who are already vulnerable, as dangerous ideas go unchallenged and may even be reinforced.

If Anyone Builds It, Everyone Dies review – how AI could kill us all (September 22, 2025) A book review of If Anyone Builds It, Everyone Dies by Eliezer Yudkowsky and Nate Soares. The book argues that superhuman AI, with its own goals, would conflict with humans and crush them, leading to extinction.

Italy first in EU to pass comprehensive law regulating use of AI (September 18, 2025) Italy has become the first country in the EU to approve a comprehensive law regulating the use of artificial intelligence, including imposing prison terms on those who use the technology to cause harm, such as generating deepfakes, and limiting child access.

The federal government says it plans to launch a public registry to keep Canadians in the loop on its growing use of AI. The registry will also help the government keep track of AI projects underway.

Is AI the New Frontier of Women’s Oppression? (September 9, 2025) In her new book, feminist author Laura Bates explores how sexbots, AI assistants, and deepfakes are reinventing misogyny and harming women.

AI and Copyright – Training AI with copyrighted material (September 8, 2025) Copyright and AI is a three-part podcast series from Fasken’s Perspectives podcast, exploring how Canadian law is grappling with the rise of generative AI.

Industry reps discussed the challenges for the regulation of AI at the CineLink industry strand of the Sarajevo Film Fest following the “tsunami” of AI use cases and the associated risks for creators and copyright protections.

The human voiceover artists behind AI voices are grappling with the choice to embrace the gigs and earn a living, or pass on potentially life-changing opportunities from Big Tech.

As AI voice cloning in films becomes a reality, India’s dubbing artists are demanding consent, credit and fair pay.

According to OpenAI CEO Sam Altman, the AI industry hasn’t yet figured out how to protect user privacy when it comes to more sensitive conversations, because there’s no doctor-patient confidentiality when your doc is an AI.

The Code is a guidebook designed to show General Purpose AI providers how to comply with the landmark EU AI Act by translating legal obligations into practical steps. One of the guide’s chapters focuses exclusively on copyright.

This article further explores Disney and Universal’s copyright lawsuit against AI company Midjourney. Unlike other lawsuits focusing on avoiding scraping copyright-protected works altogether, Disney and Universal contend Midjourney has already demonstrated the technical ability to restrict certain types of content and therefore could implement similar safeguards to prevent the replication of recognizable copyrighted material.

AI Visual Effects and Copyright Infringement (June 30, 2025) This article examines a recent decision by the Beijing Tongzhou Court that concluded it infringes on copyright to make an unauthorized copy of artwork and to use that as a base image for the creation of a commercial product that is substantially similar to the original work using an AI tool to do minor visual editing to produce the commercial product.

Learn more about the proposal Denmark has put forth that would give every one of its citizens the legal ground to go after someone who uses their image without their consent.

Disney and Universal joined forces in a lawsuit against AI image creator Midjourney, claiming the company used and distributed AI-generated characters from the movie studios and alleges that Midjourney disregarded requests to stop.

Natasha Lyonne’s Company Uses AI to Make Films Ethically (May 5, 2025) A look at the partnership between Asteria and Moonvalley – two companies using AI ethically to write, direct, animate, score, and churn out a feature film without using stolen material.

BBC Recreates Agatha Christie Using AI (April 30, 2025) BBC Studios has worked with Agatha Christie Limited to forge a writing course led by Christie for its BBC Maestro platform. The platform has teamed with Christie’s estate, a professional actress and VFX artists to recreate her voice and likeness using AI-enhanced tech and restored audio recordings.

Opinion: AI will ruin art, and it will ruin the sparkle in our lives (April 27, 2025) How a marketing ploy to promote the latest iteration of a generative AI-based image generation tool released by OpenAI unleashed discussion about intellectual property protection regimes and how, without some form of protection for the innovator, there will be no incentives to create new innovations.

The studio, which will produce films at budgets of $500,000 or less, plans to use scripts and performances from human writers and actors as the basis for its films, with the voices and expressions from performers forming the basis for the AI-generated footage.

YouTube Partners With CAA to Protect Artists From Unauthorized AI Use (December 17, 2024) Under this first-of-its-kind deal, CAA talent will be able to identify and manage AI-generated content featuring their faces on YouTube at scale.

Legality of Data Scraping Using AI Revisiting In Canada (December 13, 2024) Canadian courts are once again due to rule on the use of AI technology in scraping data from third-party websites. The Canadian Legal Information Institute (CanLII) filed a claim with the Supreme Court of British Columbia alleging the defendants violated the CanLII website’s terms of use, which expressly prohibit the bulk downloading and web scraping of the CanLII website without permission or a license.

I Went to the Premiere of the First Commercially Streaming AI-Generated Movies (December 11, 2024) A journalist shares their experience attending a movie premiere, hosted by TCL (the largest TV manufacturer on Earth), as part of a pilot program designed to normalize AI movies and TV shows for an audience that it plans to monetize explicitly with targeted advertising and whose internal data suggests that the people who watch its free television streaming network are too lazy to change the channel.

The public launch of OpenAI’s text-to-video generator tool Sora comes as the entertainment industry grapples with deployment of technology potentially capable of slashing production costs. While there are legal and labour protections, text-to-video tools are expected to have major applications in areas like visual effects and animation, with some industry folk having already adopted the technology into their workflows.

OpenAI CEO Sam Altman Says “New Economic Models” Needed for Creators in AI World (December 4, 2024)

Forget Sora — a new AI video model is one of the best I've ever seen (December 4, 2024)

Coca Cola’s AI-Generated Ad Controversy, Explained (November 16, 2024)

Actor’s experience of AI gone wrong (October 17, 2024)

Gavin Newsom Vetoes AI Safety Bill, Which Had Backing of SAG-AFTRA (September 29, 2024)

United Nations Wants AI to be Handled With Same Urgency as Climate Change (September 25, 2024)

New California AI Legislation Affecting Hollywood (September 20, 2024)

With AI, Dead Celebrities Are Working Again—And Making Millions (September 19, 2024)

Union Negotiates AI Rules (September 16, 2024)

Future-Proofing Creativity: Responding to AI’s Game-Changing Impact (August 22, 2024)

Artists Score Major Win in Copyright Case Against AI Art Generators (August 13, 2024)

Hollywood's Divide on Artificial Intelligence Is Only Growing (August 6, 2024)

We need more control over AI’s influence on our stories (February 22, 2024)

Canadian TV, film, music industries ask MPs for protection against AI (February 12, 2024)

The Hollywood Jobs Most at Risk From AI (January 30, 2024)

Four AI trends to watch in 2024 (January 19, 2024)

US regulator denies Apple, Disney bids to skip votes on AI (January 4, 2024)

The Avatar effect - artificial intelligence and insurance talent (December 1, 2023)

Why the Hollywood Acting Game is about to change forever! (June 28, 2023)